KGraphLang is a knowledge graph query language designed for Ensemble Reasoning and Reasoning LLMs.

Reasoning LLMs are trained to split a request into steps and then consider and follow each step to complete the request, revising the steps as needed. Ensemble Reasoning is a method that takes advantage of this step-wise process to execute steps directly during reasoning. This dramatically improves the performance of A.I. Agents by eliminating the latency of switching back and forth between the LLM and tool calls.

More information about Ensemble Reasoning is available in the articles:

- https://blog.vital.ai/2025/01/21/ensemble-reasoning-with-the-deepseek-r1-and-qwen-qwq-llms/

- https://blog.vital.ai/2025/01/13/agents-and-ensemble-reasoning/

Why a new query language?

KGraphLang is designed to be simpler than query languages like SQL and graph query languages like SPARQL and OpenCypher.

By stripping the query language down to the essentials, we empower LLMs and fine-tuned SLMs to generate syntactically valid queries without ambiguity.

Domain-specific predicates make up for the loss of the more general and complex syntax found in other query languages.

The KGraphLang specification defines predicates as relations between parameters. An application of KGraphLang defines domain-specific predicates such as friend(?X, ?Y), which defines a friend relation between parameters ?X and ?Y.

By defining good predicates, we hide any complexity inside the predicate implementations and keep the LLM interface clean and minimalist. Because predicates act as the gateway to all data, they also give direct control over exactly what information the LLM can access.
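To make the gateway idea concrete, here is a minimal Python sketch, assuming a hypothetical `PredicateRegistry` class and toy in-memory records (none of these names come from the KGraphLang codebase). The only data a query can reach is what a registered predicate exposes: `friend_rows` returns person/friend pairs while the salary field stays hidden, and an unregistered predicate is rejected.

```python
from typing import Callable, Dict, List, Tuple

Row = Tuple[str, ...]

# Hypothetical internal records. No predicate exposes the "salary" field.
_PEOPLE: Dict[str, Dict[str, object]] = {
    "urn:alice": {"salary": 120000, "friends": ["urn:bob"]},
    "urn:bob": {"salary": 95000, "friends": ["urn:alice", "urn:carol"]},
    "urn:carol": {"salary": 101000, "friends": []},
}


def friend_rows() -> List[Row]:
    """Implementation of friend(?X, ?Y): only (person, friend) pairs leave this function."""
    return [(pid, f) for pid, rec in _PEOPLE.items() for f in rec["friends"]]


class PredicateRegistry:
    """Hypothetical registry acting as the LLM's only gateway to the data."""

    def __init__(self) -> None:
        self._impls: Dict[str, Callable[[], List[Row]]] = {}

    def register(self, name: str, impl: Callable[[], List[Row]]) -> None:
        self._impls[name] = impl

    def rows(self, name: str) -> List[Row]:
        # Queries may only use predicates the application chose to register.
        if name not in self._impls:
            raise KeyError(f"predicate not available: {name}")
        return self._impls[name]()


registry = PredicateRegistry()
registry.register("friend", friend_rows)
```

Here `registry.rows("friend")` returns three pairs, while `registry.rows("salary")` raises `KeyError`, because no such predicate was ever registered.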

As an example, let’s consider a supply chain application.

Using KGraphLang, we can define predicates for supply chain use cases such as:

- Product Information: product(?Product, ?Supplier, ?ProductInfo)

- Supplier Information: supplier(?Supplier, ?Product, ?SupplierInfo)

- Shipping Routes: route(?Source, ?Destination, ?Route)

- Weather Data and Predictions: weather(?Location, ?DateTime, ?Report)

- Delivery Cost Prediction: cost(?Route, ?Product, ?Cost)

- Performance Prediction: performance(?Supplier, ?Product, ?Route, ?Weather, ?Performance)

These predicates are implemented using queries to the underlying knowledge graph or via code, such as a weather service API.

The A.I. Agent, Porter, receives a report that the delivery of 500 controller motors, critically needed to manufacture widgets at WidgetCo, is delayed, and is given the task of finding an alternate source.

Porter can generate kgraphlang queries to look up information using the predicates and find an alternate source of controller motors that fits the needs of WidgetCo. These queries are processed as they are generated, so the reasoning trajectory can change as information is retrieved in real time.

Thus, during reasoning, Porter can generate and execute a kgraphlang query such as:

```
?MinCost = min { ?C |
    ?Supplier in ?Suppliers,
    route(?Supplier, WidgetCo, ?Route),
    cost(?Route, ControllerMotor, ?C)
}
```

This determines the minimum delivery cost given a list of suppliers, and the cost estimate can then shape the next steps of Porter’s reasoning. The next step could be using the performance() predicate to estimate the likelihood of on-time delivery. Porter can decide to switch to a different supplier if the cost and delivery estimates are not acceptable. Much in the same way a Reasoning LLM can continue to reason on a math problem until a solution is found, it can continue to issue kgraphlang queries until an acceptable solution is found and the alternate source of controller motors is secured.
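The semantics of the `min { ... }` aggregation above can be sketched in Python as a nested iteration over the solutions of each goal, followed by a minimum. The route and cost tables below are hypothetical toy data standing in for the real predicates, and returning `None` for an empty solution set is an assumption, not documented KGraphLang behavior.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical toy data standing in for the route() and cost() predicates.
ROUTES: Dict[Tuple[str, str], List[str]] = {
    ("SupplierA", "WidgetCo"): ["RouteA1", "RouteA2"],
    ("SupplierB", "WidgetCo"): ["RouteB1"],
    ("SupplierC", "WidgetCo"): [],  # no known route to WidgetCo
}
COSTS: Dict[Tuple[str, str], float] = {
    ("RouteA1", "ControllerMotor"): 1250.0,
    ("RouteA2", "ControllerMotor"): 980.0,
    ("RouteB1", "ControllerMotor"): 1100.0,
}


def route(source: str, destination: str) -> List[str]:
    """Solutions for ?Route in route(Source, Destination, ?Route)."""
    return ROUTES.get((source, destination), [])


def cost(route_id: str, product: str) -> List[float]:
    """Solutions for ?C in cost(Route, Product, ?C); empty if unknown."""
    c = COSTS.get((route_id, product))
    return [] if c is None else [c]


def min_delivery_cost(suppliers: Sequence[str], destination: str, product: str) -> Optional[float]:
    """?MinCost = min { ?C | ?Supplier in ?Suppliers, route(...), cost(...) }."""
    candidates = [
        c
        for supplier in suppliers               # ?Supplier in ?Suppliers
        for r in route(supplier, destination)   # route(?Supplier, WidgetCo, ?Route)
        for c in cost(r, product)               # cost(?Route, ControllerMotor, ?C)
    ]
    # Assumption: an empty solution set yields no value (None) rather than an error.
    return min(candidates) if candidates else None
```

With all three suppliers, `min_delivery_cost(["SupplierA", "SupplierB", "SupplierC"], "WidgetCo", "ControllerMotor")` picks RouteA2 at 980.0; dropping SupplierA would raise the minimum to 1100.0, which is the kind of result that could push Porter toward a different supplier.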

KGraphLang Predicates

Predicates define a relation between parameters. For knowledge graphs, these often are traversals on the knowledge graph. For instance,

```
friend(?Person1, ?Person2)
```

could define a traversal on the graph from ?Person1 to ?Person2 along a relation or “edge” representing friendship.

These can be chained together, such as:

```
friend(?Person1, ?Person2), friend(?Person2, ?Person3)
```

which would follow a two-hop path from ?Person1 to ?Person3 along “friendship” relations.
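One way to picture how chained predicates are evaluated is a naive nested-loop solver: each goal extends the variable bindings produced by the previous goal, so a variable shared between goals (here `?Person2`) acts as the join key. This is a minimal sketch over hypothetical in-memory facts, not the KGraphLang evaluator; a KGraphService-backed implementation would push such a path into a graph traversal instead.

```python
from typing import Dict, Iterator, List, Optional, Sequence, Tuple

Binding = Dict[str, str]
Goal = Tuple[str, Tuple[str, ...]]  # (predicate name, argument terms)

# Hypothetical facts for the friend predicate.
FACTS: Dict[str, List[Tuple[str, ...]]] = {
    "friend": [("alice", "bob"), ("bob", "carol"), ("bob", "dave"), ("carol", "erin")],
}


def is_var(term: str) -> bool:
    return term.startswith("?")


def solve_goal(goal: Goal, binding: Binding) -> Iterator[Binding]:
    """Yield every extension of `binding` that makes `goal` match a fact."""
    name, args = goal
    for fact in FACTS[name]:
        new = dict(binding)
        for term, value in zip(args, fact):
            if is_var(term):
                if new.setdefault(term, value) != value:
                    break  # variable already bound to a different value
            elif term != value:
                break  # constant argument does not match
        else:
            yield new


def solve(goals: Sequence[Goal], binding: Optional[Binding] = None) -> Iterator[Binding]:
    """Conjunction: solve the first goal, then the rest under each resulting binding."""
    binding = {} if binding is None else binding
    if not goals:
        yield binding
        return
    for extended in solve_goal(goals[0], binding):
        yield from solve(goals[1:], extended)


two_hop = [("friend", ("?Person1", "?Person2")), ("friend", ("?Person2", "?Person3"))]
```

Iterating `solve(two_hop)` yields the two-hop paths alice→bob→carol, alice→bob→dave and bob→carol→erin; passing an initial binding such as `{"?Person1": "alice"}` restricts the results to paths starting at alice.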

The kgraphlang predicates can be implemented directly in code or use an underlying data source. For Ensemble Reasoning, the implementation uses KGraphService to implement predicates over a knowledge graph.

Besides traversing the knowledge graph, there are two other major types of predicates: Vector Similarity Predicates and String Hash Predicates.

Vector Similarity Predicates make use of vectors and vector search to find similar knowledge graph elements. This is critical to support “Graph RAG” functionality (https://microsoft.github.io/graphrag/). A node representing a supplier in our earlier example could be similar to other suppliers if they supply similar products or are otherwise similar.
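A vector similarity predicate can be sketched as a ranked nearest-neighbor lookup. The Python below is a minimal, brute-force illustration assuming hypothetical three-dimensional supplier embeddings and a hypothetical `similar` predicate name; a real deployment would use embeddings from a model and an approximate nearest-neighbor index in the vector database rather than scoring every node.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical embeddings for supplier nodes (e.g. derived from the products they supply).
EMBEDDINGS: Dict[str, List[float]] = {
    "SupplierA": [0.9, 0.1, 0.0],
    "SupplierB": [0.8, 0.2, 0.1],
    "SupplierC": [0.0, 0.1, 0.9],
}


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def similar(node: str, top_k: int = 10) -> List[Tuple[str, float]]:
    """Hypothetical similar(?Node, ?Other) predicate with ?Node bound.

    top_k plays the role of an annotation like @top_k(10).
    """
    query = EMBEDDINGS[node]
    scored = [(other, cosine(query, vec)) for other, vec in EMBEDDINGS.items() if other != node]
    scored.sort(key=lambda item: item[1], reverse=True)  # most similar first
    return scored[:top_k]
```

For SupplierA, SupplierB ranks first (its vector points in nearly the same direction), and `similar("SupplierA", top_k=1)` returns only that best match.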

String Hash Predicates make use of string hashing and string hash search to find text that is similar on a character-by-character basis. This helps match names, since “Jon Smith” is very similar to “John Smyth”, and find documents that contain overlapping language.
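The source does not say which string hashing scheme KGraphService uses. MinHash over character n-grams is one common technique for this kind of matching, so the Python below sketches it under that assumption: each string becomes a set of lowercased character bigrams, and a signature of per-seed minimum hash values estimates the Jaccard similarity of those sets. The function names are illustrative only.

```python
import hashlib
from typing import List, Set


def shingles(text: str, n: int = 2) -> Set[str]:
    """Lowercased character n-grams (bigrams by default)."""
    t = text.lower()
    return {t[i:i + n] for i in range(len(t) - n + 1)}


def jaccard(a: str, b: str, n: int = 2) -> float:
    """Exact Jaccard similarity of the two shingle sets."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def _hash(shingle: str, seed: int) -> int:
    """64-bit hash of a shingle; each seed acts as an independent hash function."""
    digest = hashlib.blake2b(shingle.encode("utf-8"), digest_size=8,
                             salt=seed.to_bytes(16, "little")).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(text: str, num_hashes: int = 256, n: int = 2) -> List[int]:
    """For each seed, keep the smallest hash over all shingles of the text."""
    sh = shingles(text, n)
    if not sh:
        raise ValueError("text is shorter than the shingle size")
    return [min(_hash(s, seed) for s in sh) for seed in range(num_hashes)]


def minhash_similarity(a: str, b: str, num_hashes: int = 256, n: int = 2) -> float:
    """Fraction of matching signature slots: an estimate of jaccard(a, b)."""
    sig_a = minhash_signature(a, num_hashes, n)
    sig_b = minhash_signature(b, num_hashes, n)
    return sum(x == y for x, y in zip(sig_a, sig_b)) / num_hashes
```

“Jon Smith” and “John Smyth” share 5 of their 12 distinct bigrams (Jaccard 5/12, about 0.42), versus 2/15 for “Jon Smith” and “Mary Jones”. Because signatures are short fixed-length lists, a search index can bucket them (for example with locality-sensitive hashing) instead of comparing every pair of strings.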

KGraphLang Syntax

KGraphLang supports a comprehensive syntax while maintaining simplicity to enable high quality LLM query generation.

The syntax includes:

- Predicates implemented in code or via queries to an underlying data source

- Predicate annotation with extra-logical values like @top_k(10) to control predicate output

- Grouping and logical AND, OR, NOT

- Comparisons: >, <, >=, <=, !=

- Aggregation functions: collections, count, sum, average, max, min

- Math functions

- Data types: string, number, boolean, date, time, currency, geolocation, units, URIs

- Complex data types for List, Map

- Membership and Subset in Lists and Maps

- Single and multi-line comments

Here’s an example:

```
?uri_prop = 'urn:uri_prop'^URI,
?name_prop = 'urn:name_prop'^URI,
?email_prop = 'urn:email_prop'^URI,
?prop_list = [ ?uri_prop, ?name_prop, ?email_prop ],
person_uri_list(?PersonList),
?PersonEmailMapList = collection {
    ?PersonMapRecord |
    ?Pid in ?PersonList,
    get_person_map(?Pid, ?prop_list, ?PersonMapRecord)
}.
```

This retrieves a list of Person records, each of which has an id, name, and email address.

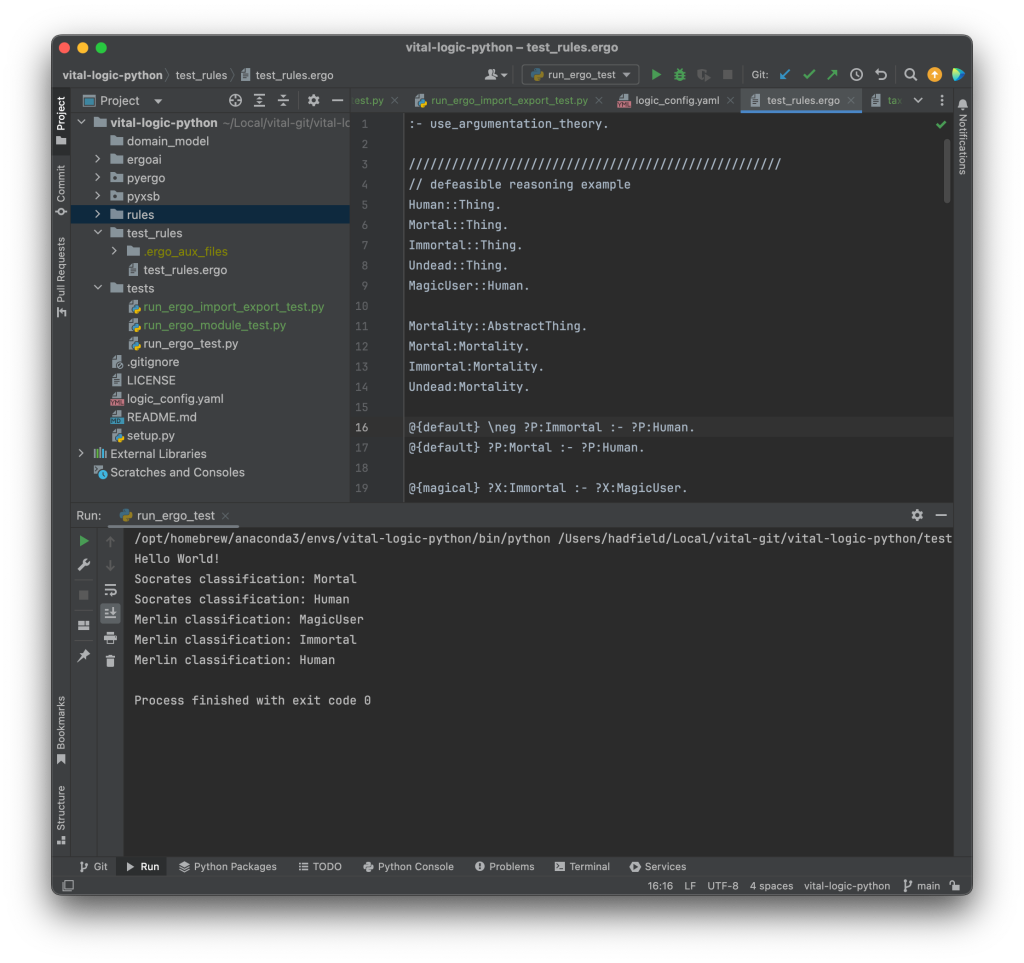
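The `collection { ... }` aggregation in the example gathers one value per solution of its body. A Python analogue is a list comprehension over `?PersonList`; the sketch below assumes a hypothetical in-memory person store and Python stand-ins for `person_uri_list` and `get_person_map`, whose real implementations would query the knowledge graph.

```python
from typing import Dict, List

URI_PROP = "urn:uri_prop"
NAME_PROP = "urn:name_prop"
EMAIL_PROP = "urn:email_prop"

# Hypothetical person store; the phone field is deliberately not requested below.
_PERSON_STORE: Dict[str, Dict[str, str]] = {
    "urn:person:1": {URI_PROP: "urn:person:1", NAME_PROP: "Ada",
                     EMAIL_PROP: "ada@example.com", "urn:phone_prop": "555-0100"},
    "urn:person:2": {URI_PROP: "urn:person:2", NAME_PROP: "Grace",
                     EMAIL_PROP: "grace@example.com"},
}


def person_uri_list() -> List[str]:
    """Stand-in for person_uri_list(?PersonList): the list of person URIs."""
    return list(_PERSON_STORE)


def get_person_map(pid: str, prop_list: List[str]) -> Dict[str, str]:
    """Stand-in for get_person_map(?Pid, ?prop_list, ?PersonMapRecord)."""
    record = _PERSON_STORE[pid]
    # Keep only the requested properties.
    return {prop: record[prop] for prop in prop_list if prop in record}


prop_list = [URI_PROP, NAME_PROP, EMAIL_PROP]
person_list = person_uri_list()
# collection { ?PersonMapRecord | ?Pid in ?PersonList, get_person_map(...) }
person_email_map_list = [get_person_map(pid, prop_list) for pid in person_list]
```

Each resulting map contains only the three requested properties, so fields outside `prop_list`, like the phone number here, never reach the LLM.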

KGraphLang Implementation

The KGraphLang implementation evaluates kgraphlang queries based on a set of registered predicates.

The predicates are implemented either using an underlying data source or directly in code.

Predicates implemented using KGraphService use an underlying knowledge graph implemented by a graph and vector database. This means that, internally, the kgraphlang query is translated into a target query language such as OpenCypher, SPARQL, or GraphQL, which is used with the implementing database. So, predicates are a means of bundling complex queries into simple chunks the LLM can easily work with.
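As an illustration of that translation step, here is a minimal Python sketch that turns a single `friend` predicate call into a parameterized OpenCypher query. The graph schema (`Person` nodes with a `uri` property connected by `FRIEND` relationships) and the `to_cypher` function are hypothetical; this is not KGraphService's translator.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical schema mapping: each predicate becomes a Cypher MATCH pattern
# whose node aliases line up with the predicate's argument positions.
CYPHER_PATTERNS: Dict[str, Tuple[str, Tuple[str, ...]]] = {
    "friend": ("MATCH (a:Person)-[:FRIEND]->(b:Person)", ("a", "b")),
}


def to_cypher(name: str, args: Sequence[str]) -> Tuple[str, Dict[str, str]]:
    """Translate one predicate call into a parameterized OpenCypher query."""
    pattern, aliases = CYPHER_PATTERNS[name]
    where: List[str] = []
    returns: List[str] = []
    params: Dict[str, str] = {}
    for alias, term in zip(aliases, args):
        if term.startswith("?"):
            # Unbound variable: return the node's uri under the variable's name.
            returns.append(f"{alias}.uri AS {term[1:]}")
        else:
            # Constant: pass it as a query parameter instead of splicing it into the text.
            key = f"{alias}_uri"
            where.append(f"{alias}.uri = ${key}")
            params[key] = term
    query = pattern
    if where:
        query += " WHERE " + " AND ".join(where)
    if returns:
        query += " RETURN " + ", ".join(returns)
    else:
        query += " RETURN count(*) AS matches"  # fully bound: just check existence
    return query, params
```

For example, `to_cypher("friend", ("urn:alice", "?Friend"))` produces `MATCH (a:Person)-[:FRIEND]->(b:Person) WHERE a.uri = $a_uri RETURN b.uri AS Friend` with parameters `{"a_uri": "urn:alice"}`. Keeping constants as parameters means LLM-generated values never alter the structure of the database query.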

An example of a predicate directly implemented in code could be:

```
weather(?Location, ?DateTime, ?Report)
```

This could be implemented via an API call to a weather service to get an accurate weather report at the time the query is evaluated.
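A code-implemented predicate like `weather` can be sketched as a Python function that calls out to a service each time it is evaluated. In this sketch, `make_weather_predicate` and the `fetch` hook are hypothetical, and `fake_fetch` stands in for a real weather API client so the example runs offline; returning no rows when the service has no report is an assumed convention for "the predicate has no solutions".

```python
from datetime import datetime
from typing import Callable, Dict, List, Optional, Tuple

Report = Dict[str, object]
# Hypothetical hook: a real deployment would wrap a weather-service client here.
WeatherFetcher = Callable[[str, datetime], Optional[Report]]
Row = Tuple[str, datetime, Report]


def make_weather_predicate(fetch: WeatherFetcher) -> Callable[[str, datetime], List[Row]]:
    """Build weather(?Location, ?DateTime, ?Report) for bound ?Location and ?DateTime."""

    def weather(location: str, when: datetime) -> List[Row]:
        report = fetch(location, when)  # called at evaluation time, so the data is current
        if report is None:
            return []  # no report: the predicate has no solutions
        return [(location, when, report)]

    return weather


def fake_fetch(location: str, when: datetime) -> Optional[Report]:
    """Offline stand-in for a weather API."""
    if location == "Atlantis":
        return None
    return {"location": location, "forecast": "rain", "wind_kph": 30}


weather = make_weather_predicate(fake_fetch)
rows = weather("Rotterdam", datetime(2025, 2, 1, 12, 0))
```

Injecting the fetcher keeps the predicate's interface identical whether it is backed by a live API, a cache, or a test stub.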

The KGraphLang implementation parses the query and then evaluates it, but there are cases where the parse result is useful on its own. Parsing a kgraphlang query produces an AST (abstract syntax tree), which can be manipulated first and evaluated later. This is useful for optimizing the query or replacing predicates with pre-cached values.
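The kind of AST rewrite described above can be sketched in Python with a few dataclasses. The node types (`PredicateCall`, `CachedRows`, `Conjunction`) and `substitute_cached` are hypothetical and do not reflect the AST that the KGraphLang parser actually produces; the sketch only shows the idea of swapping a predicate call for precomputed solutions before evaluation.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union

Row = Tuple[str, ...]


@dataclass(frozen=True)
class PredicateCall:
    """Hypothetical AST node for a call such as route(SupplierA, WidgetCo, ?Route)."""
    name: str
    args: Tuple[str, ...]


@dataclass(frozen=True)
class CachedRows:
    """Hypothetical replacement node holding precomputed solutions for a call."""
    args: Tuple[str, ...]
    rows: Tuple[Row, ...]


Goal = Union[PredicateCall, CachedRows]


@dataclass
class Conjunction:
    """A comma-separated list of goals, all of which must hold."""
    goals: List[Goal]


def substitute_cached(query: Conjunction, cache: Dict[PredicateCall, Tuple[Row, ...]]) -> Conjunction:
    """Return a new AST in which calls found in the cache become CachedRows nodes."""
    new_goals: List[Goal] = []
    for goal in query.goals:
        if isinstance(goal, PredicateCall) and goal in cache:
            new_goals.append(CachedRows(goal.args, cache[goal]))
        else:
            new_goals.append(goal)
    return Conjunction(new_goals)  # the input AST is left untouched


query = Conjunction([
    PredicateCall("route", ("SupplierA", "WidgetCo", "?Route")),
    PredicateCall("cost", ("?Route", "ControllerMotor", "?C")),
])
cache = {
    PredicateCall("route", ("SupplierA", "WidgetCo", "?Route")): (("SupplierA", "WidgetCo", "RouteA1"),),
}
rewritten = substitute_cached(query, cache)
```

Because `PredicateCall` is a frozen dataclass, it is hashable and can be used directly as a cache key. After the rewrite, only the `cost` call still needs to hit a data source when the query is evaluated.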

KGraphLang Fine-tuning

Reasoning LLMs such as R1-Distill-Llama succeed in producing valid KGraphLang queries using only prompting. However, fine-tuning should improve generation and reduce the prompt needed for a KGraphLang request to just the predicate definitions relevant to that request. Fine-tuning should also allow domain-specific SLMs to produce valid KGraphLang queries for the specific sets of predicates included in their training, making for a highly optimized query capability.

We’re in the process of collecting datasets to use for kgraphlang fine-tuning.

Source Code

All source code is open-source and available via GitHub.

KGraphLang is implemented in the repo:

https://github.com/vital-ai/kgraphlang

KGraphService is implemented in the repo:

https://github.com/vital-ai/kgraphservice

The Vital LLM Ensemble Reasoner, which uses KGraphLang, is implemented in:

https://github.com/vital-ai/vital-llm-reasoner

The vLLM-based server running the Ensemble Reasoner is implemented in:

https://github.com/vital-ai/vital-llm-reasoner-server

Next steps

A follow-up article will present an implementation of KGraphLang predicates based on sample datasets to make it easy to run examples, and subsequent articles will explore deploying Ensemble Reasoning with kgraphlang.

If you are interested in utilizing kgraphlang as part of your Reasoning LLM and A.I. Agent implementations, please contact us at Vital.ai!