Here’s a quick video demo of using GPT-3 (text-davinci-003) with a voice interface.

OpenAI recently released the ChatGPT model, and I have found it quite interesting to experiment with.

I thought it would be fun to connect it to a voice interface, much like the Amazon Alexa and Google Home AI assistants.

I decided on the following approach:

1. Listen for a wake word.
2. Record audio until the speaker stops speaking.
3. Transcribe the recording to text.
4. Use GPT-3 to generate a text response.
5. Use Amazon Polly to convert the response to speech.
6. Play the resulting audio in the browser.
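The transcribe, generate, and synthesize steps form a simple chain in which each stage's output feeds the next. Below is a minimal Python sketch of that chaining, not Haley.ai's implementation: `make_pipeline` and the lambda stages are hypothetical stand-ins for Whisper, GPT-3, and Polly, so the example runs without any external services.

```python
from typing import Callable

# Hypothetical stage signatures; each stage is a plain function so the
# sketch runs without any real speech or language-model services.
Transcribe = Callable[[bytes], str]
Generate = Callable[[str], str]
Synthesize = Callable[[str], bytes]


def make_pipeline(transcribe: Transcribe, generate: Generate,
                  synthesize: Synthesize) -> Callable[[bytes], bytes]:
    """Chain speech-to-text, text generation, and text-to-speech.

    Wake-word and end-of-speech detection happen before this point
    (in the browser); playback happens after it.
    """
    def run(recorded_audio: bytes) -> bytes:
        text = transcribe(recorded_audio)   # e.g. Whisper
        reply = generate(text)              # e.g. GPT-3 text-davinci-003
        return synthesize(reply)            # e.g. Amazon Polly
    return run


# Stub stages standing in for the real models.
pipeline = make_pipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    generate=lambda text: f"You said: {text}",
    synthesize=lambda reply: reply.encode("utf-8"),
)
speech = pipeline(b"hello there")
print(speech)  # b'You said: hello there'
```

Keeping each stage behind a plain function signature makes it easy to swap one model for another without touching the rest of the chain.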

Fortunately, the Haley.ai platform supports composing workflows that include models and other functionality. For transcribing audio, I selected the Whisper model. Because ChatGPT is not yet available through the API, I used the latest GPT-3 model available through the current OpenAI API (text-davinci-003), with a prompt similar to the ChatGPT prompt. For speech, I selected the Amazon Polly voice “Joanna”, one of the “Neural” voices, which support only a subset of the SSML tags.
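As a sketch of the Polly side, assuming the boto3 SDK (the post doesn't say how Haley.ai calls Polly), the snippet below wraps the model's reply in a `<speak>` element and requests the neural “Joanna” voice. `ensure_speak`, `synthesize`, and `FakePolly` are hypothetical helpers; the fake client lets the example run without AWS credentials. The sketch does not filter out tags that the neural engine doesn't support.

```python
import io
from typing import Any


def ensure_speak(reply: str) -> str:
    """Wrap model output in <speak> unless the model already did so."""
    reply = reply.strip()
    if reply.startswith("<speak>") and reply.endswith("</speak>"):
        return reply
    return f"<speak>{reply}</speak>"


def synthesize(polly_client: Any, reply: str) -> bytes:
    """Send SSML to Polly's neural "Joanna" voice and return MP3 bytes.

    polly_client is assumed to be a boto3 Polly client,
    e.g. boto3.client("polly").
    """
    response = polly_client.synthesize_speech(
        Engine="neural",
        OutputFormat="mp3",
        Text=ensure_speak(reply),
        TextType="ssml",
        VoiceId="Joanna",
    )
    return response["AudioStream"].read()


# Hypothetical stand-in client so the sketch runs without AWS credentials.
class FakePolly:
    def synthesize_speech(self, **kwargs: Any) -> dict:
        self.last_request = kwargs
        return {"AudioStream": io.BytesIO(b"fake-mp3")}


client = FakePolly()
audio = synthesize(client, '<prosody rate="slow">Hello there</prosody>')
print(client.last_request["Text"])
# <speak><prosody rate="slow">Hello there</prosody></speak>
```

Because the reply is passed through as SSML, a stray `&` or `<` in the model's output would make the request invalid. When the model is not expected to emit tags, escaping the text first (for example with `xml.sax.saxutils.escape`) avoids that.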

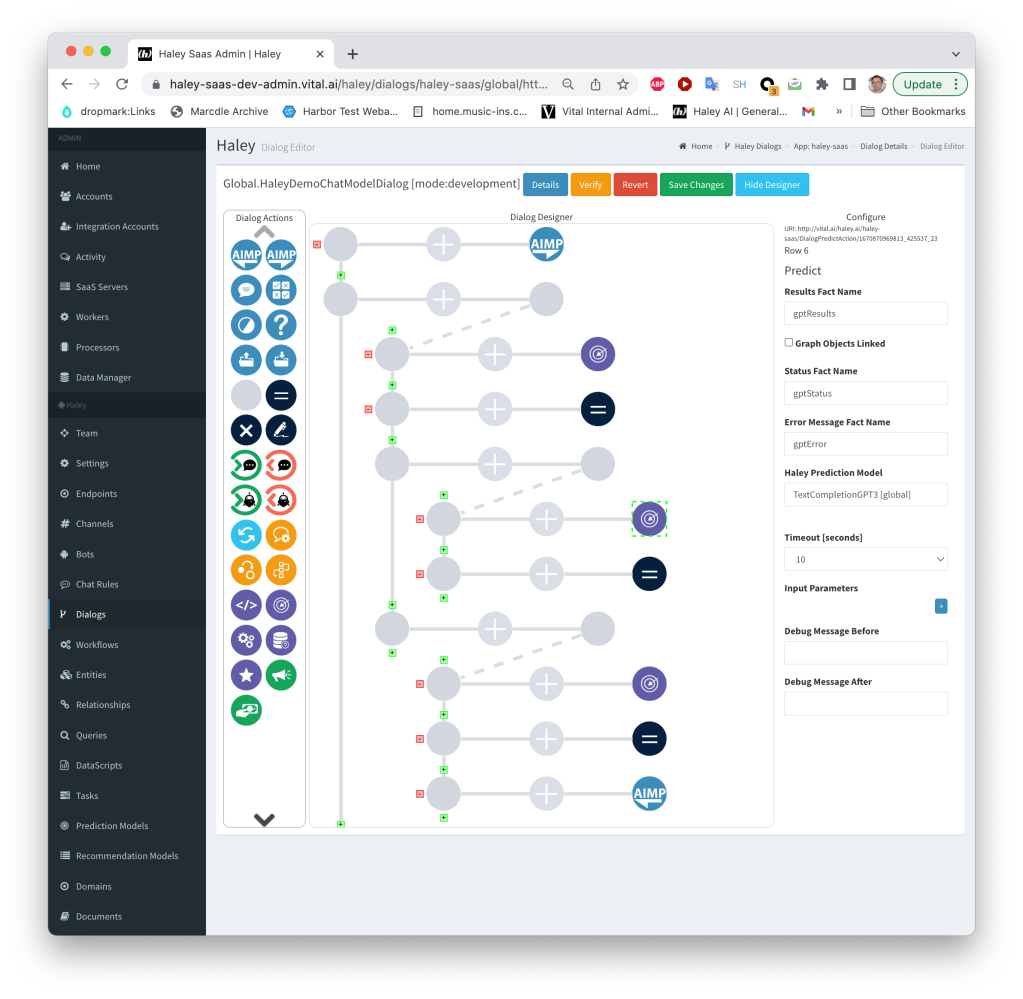

The models were composed together with the following workflow:

The screenshot shows the Haley Workflow editor. The three models are chained together, and the output of the Polly model is sent back to the browser.

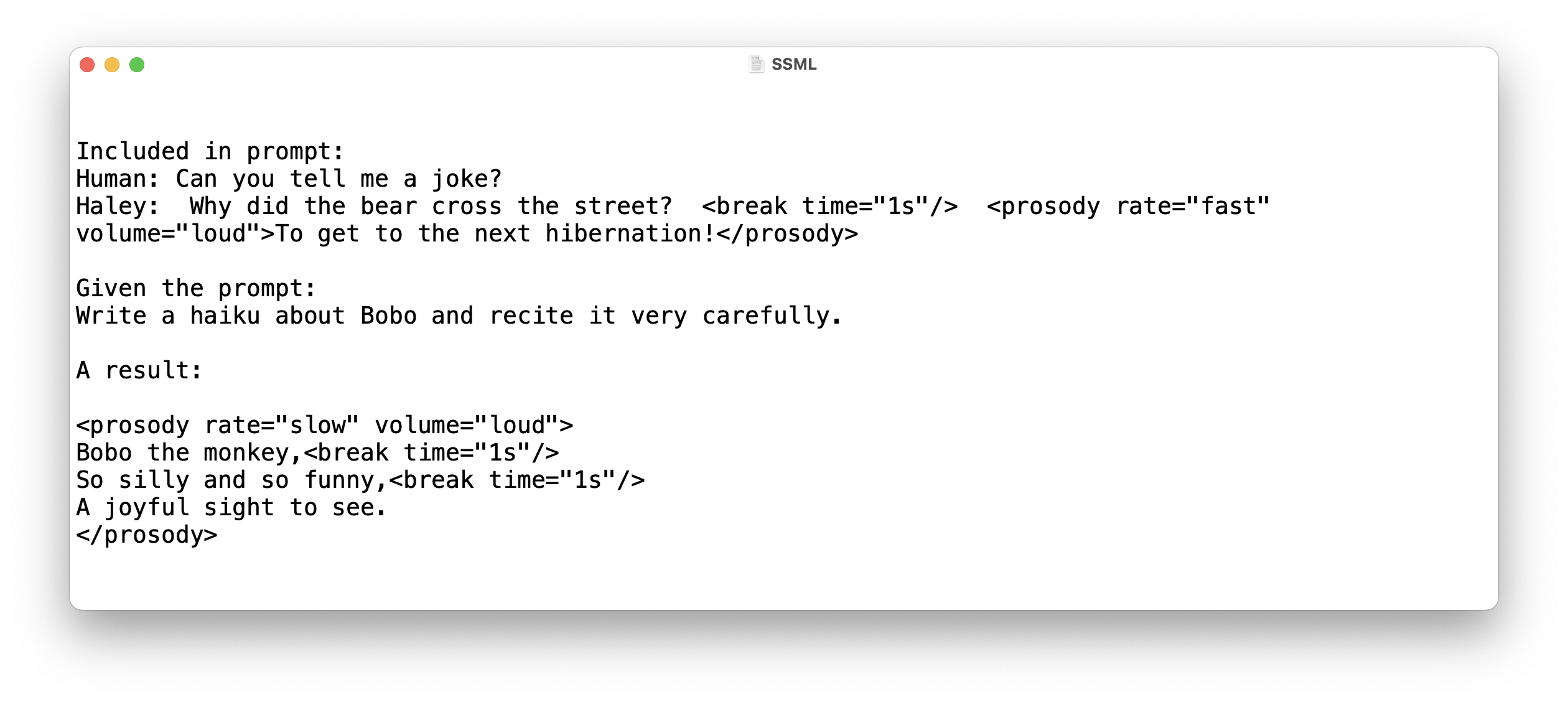

Speaking of Polly, the prompt used with GPT-3 shows some examples like the “prosody” tag which affects the Polly output, such as the haiku below:

Recent chat exchanges are included in the prompt to give GPT-3 some memory of the conversation history.
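A minimal sketch of that rolling memory follows. It assumes a plain “User:/Assistant:” transcript format and a fixed number of remembered exchanges. The post doesn't give the actual prompt, so `ChatPrompt`, the labels, and `max_turns` are illustrative only. A `deque` with `maxlen` drops the oldest exchange automatically once the limit is reached, which keeps the prompt within the model's context window.

```python
from collections import deque


class ChatPrompt:
    """Build a ChatGPT-style prompt that includes recent exchanges."""

    def __init__(self, preamble: str, max_turns: int = 4) -> None:
        self.preamble = preamble
        # (user, assistant) pairs; deque(maxlen=...) drops the oldest when full
        self.history = deque(maxlen=max_turns)

    def record(self, user_text: str, reply: str) -> None:
        self.history.append((user_text, reply))

    def build(self, user_text: str) -> str:
        lines = [self.preamble, ""]
        for user, assistant in self.history:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {user_text}")
        lines.append("Assistant:")  # the model continues from here
        return "\n".join(lines)


prompt = ChatPrompt("You are a helpful voice assistant.", max_turns=2)
prompt.record("Hi", "Hello!")
prompt.record("What is 2+2?", "4")
prompt.record("Thanks", "You're welcome.")
text = prompt.build("Goodbye")
print(text)  # the first exchange ("Hi") has been dropped
```

After each model response, calling `record` with the user's text and the reply keeps the history current for the next turn.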

The Haley.ai platform takes care of messaging and running the workflow, and it also provides the embedded user interface that displays the chat messages.

Within the browser, we needed a wake word to start the voice recording, and a way to track voice activity so that we could stop recording once the speaker finished and send the audio to Haley.ai for processing by the workflow.
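The recording logic amounts to a small state machine. The sketch below is in Python for consistency with the other examples, although the real code runs in the browser. The event methods (`on_wake_word`, `on_audio`, `on_speech_end`) are hypothetical hooks, not the APIs of the libraries mentioned below.

```python
from enum import Enum, auto
from typing import Callable, List


class State(Enum):
    WAITING_FOR_WAKE_WORD = auto()
    RECORDING = auto()


class Recorder:
    """Start recording on the wake word; stop and send on end of speech."""

    def __init__(self, send: Callable[[bytes], None]) -> None:
        self.state = State.WAITING_FOR_WAKE_WORD
        self.chunks: List[bytes] = []
        self.send = send  # e.g. upload the audio to the workflow

    def on_wake_word(self) -> None:
        if self.state is State.WAITING_FOR_WAKE_WORD:
            self.chunks = []
            self.state = State.RECORDING

    def on_audio(self, chunk: bytes) -> None:
        # Audio heard while waiting for the wake word is discarded.
        if self.state is State.RECORDING:
            self.chunks.append(chunk)

    def on_speech_end(self) -> None:
        if self.state is State.RECORDING:
            self.send(b"".join(self.chunks))
            self.state = State.WAITING_FOR_WAKE_WORD


sent = []
rec = Recorder(send=sent.append)
rec.on_audio(b"ignored")      # before the wake word: dropped
rec.on_wake_word()
rec.on_audio(b"hello ")
rec.on_audio(b"world")
rec.on_speech_end()
print(sent)  # [b'hello world']
```

Guarding each transition on the current state means a repeated wake word during recording, or a stray end-of-speech event while idle, is simply ignored.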

Fortunately, some open-source projects do the heavy lifting for these tasks.

For wake word detection, I used: https://github.com/jaxcore/bumblebee-hotword

And to detect voice activity, including when speaking has stopped, I used: https://github.com/solyarisoftware/WeBAD
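WeBAD handles this in the browser. To illustrate the general idea, here is a simple energy-threshold detector in Python. It is not WeBAD's algorithm: it computes the RMS level of each audio frame and declares the end of speech after a run of quiet frames that follows speech. `threshold` and `hangover` are assumed values that would need tuning for a real microphone.

```python
import math
from typing import Iterable, List, Optional


def rms(frame: List[float]) -> float:
    """Root-mean-square level of one frame of samples in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def end_of_speech(frames: Iterable[List[float]], threshold: float = 0.02,
                  hangover: int = 3) -> Optional[int]:
    """Return the index of the frame at which speech is judged to have ended.

    Speech must first be detected (a frame at or above threshold); it is then
    considered finished after `hangover` consecutive quiet frames. Returns
    None if speech never starts or never ends.
    """
    heard_speech = False
    quiet = 0
    for i, frame in enumerate(frames):
        if rms(frame) >= threshold:
            heard_speech = True
            quiet = 0  # any loud frame resets the silence counter
        elif heard_speech:
            quiet += 1
            if quiet >= hangover:
                return i
    return None


silence = [0.0] * 160
voice = [0.1, -0.1] * 80
frames = [silence, voice, voice, silence, silence, silence, voice]
print(end_of_speech(frames))  # 5
```

With 10 ms frames, a hangover of 3 frames would cut speakers off during brief pauses; a longer hangover, on the order of hundreds of milliseconds, is likely needed in practice.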

I’m hoping to make the voice detection and recording a bit more robust and then publicly release the result.