At Chat.ai, we’re working to improve voice access to artificial intelligence. Converting speech to text is a critical component of interacting with people by voice. Once speech is converted to text, it can be fed into subsequent steps that interpret the meaning of the text and then generate a response.

This article discusses running the Whisper Speech-to-Text model within the browser and includes a demo application.

Applications of Artificial Intelligence (AI) make use of various kinds of models including Transformer models such as Large Language Models (LLMs) like GPT-4 or Speech-to-Text models like Whisper.

An important aspect of an AI application is how the models are deployed on servers and devices.

How models are deployed affects the flow of information and the latency, that is, how quickly the AI application can respond. ChatGPT found great success in part by streaming incremental output back to users, giving the experience of activity and low latency while the model was completing its task.

In general, the closer a model can be deployed to the end user the better, as this reduces latency and improves responsiveness. The ideal deployment is on “edge” devices that users are directly interacting with, whether desktops, laptops, or mobile devices.

On such edge devices, web browsers such as Google Chrome and Apple Safari are the most common user interfaces.

So, running models within browsers is ideal for deployment. But browsers run on limited hardware, and there may be privacy and security constraints. Therefore, a balance must be struck between what can be deployed within the browser and what should run in the cloud, with more significant infrastructure and higher security and privacy standards. An application can be designed so that certain activity happens on the edge device and other activity happens in the cloud, in one unified and seamless user experience.

There is rapid and ongoing development of software libraries that support running models within browsers, and browsers are adopting standards such as WebGL and WebGPU to provide APIs to help optimize running the models.

One such library is Transformers.js ( https://github.com/xenova/transformers.js ), which added support for WebGPU in January 2024 and supports ONNX (Open Neural Network Exchange), an interchange standard for models.

The Whisper model comes in various sizes. The “Whisper Large” model has around 1.5 billion parameters. Several service providers, including OpenAI, provide API access to the Whisper Large model in the cloud, using the significant server and GPU hardware that models of that size require. However, the “Whisper Tiny” model has around 39 million parameters and is much more suitable for deployment within a browser.
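To make the size difference concrete, here is a rough back-of-the-envelope sketch of the memory needed just to hold the weights. It assumes 4 bytes per parameter for 32-bit floats and 1 byte per parameter for 8-bit quantized weights. Those byte widths and the function name are illustrative assumptions, and actual download sizes will vary with file format and overhead.

```typescript
// Rough weight-storage estimate: parameters x bytes per parameter.
// Byte widths are assumptions for illustration (fp32 = 4, int8 = 1).
function weightMegabytes(parameters: number, bytesPerParameter: number): number {
  return (parameters * bytesPerParameter) / 1e6; // decimal megabytes
}

const WHISPER_TINY_PARAMS = 39e6; // "around 39 million"
const WHISPER_LARGE_PARAMS = 1.5e9; // "around 1.5 billion"

const tinyFp32 = weightMegabytes(WHISPER_TINY_PARAMS, 4); // 156 MB
const tinyInt8 = weightMegabytes(WHISPER_TINY_PARAMS, 1); // 39 MB
const largeFp32 = weightMegabytes(WHISPER_LARGE_PARAMS, 4); // 6000 MB, i.e. 6 GB
```

Under these assumptions, the Tiny model's weights fit in the range of a typical web download, while the Large model's weights run to several gigabytes.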

Fewer parameters mean less accuracy and coverage, but that may be an acceptable trade-off, depending on the particular application. The application can use the edge device model when it can and roll over to the larger model in the cloud as necessary. This is in part a cost consideration: using the edge device hardware is cheaper, whereas cloud costs increase with the amount of infrastructure deployed to support the application.
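One way to picture this roll-over is the sketch below. It is not taken from any of the libraries mentioned here: the `Transcriber` type, the `confidence` field, and the 0.8 threshold are all hypothetical, and a real application would need its own signal for deciding when the edge result is not good enough.

```typescript
// Hypothetical result shape; real libraries may not expose a confidence score.
interface Transcript {
  text: string;
  confidence: number; // 0..1, assumed to be provided by the transcriber
}

// Any function that turns audio samples into a transcript.
type Transcriber = (audio: Float32Array) => Promise<Transcript>;

// Try the on-device model first; use the cloud only when the local model
// fails or reports confidence below the threshold.
async function transcribeWithFallback(
  audio: Float32Array,
  local: Transcriber,
  cloud: Transcriber,
  minConfidence = 0.8, // assumed threshold, tune per application
): Promise<Transcript & { source: "edge" | "cloud" }> {
  try {
    const result = await local(audio);
    if (result.confidence >= minConfidence) {
      return { ...result, source: "edge" };
    }
  } catch {
    // Local model unavailable or failed; fall through to the cloud.
  }
  const result = await cloud(audio);
  return { ...result, source: "cloud" };
}
```

Because the cloud call only happens on the fallback path, cloud usage (and cost) scales with how often the edge model falls short rather than with every utterance.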

The Transformers.js library can use the Whisper Tiny model and there is a great demo of it for Speech-to-Text here: https://huggingface.co/spaces/Xenova/whisper-web

This demo uses a web application written using the React web framework, whereas it would be nice to have a separate JavaScript library to drop into any web application.

We created such a library here: https://github.com/vital-ai/vital-stt-js

The library is open source and needs some cleanup and improvement, but it is sufficient for some usage testing!

In order to test the capability of the model + browser combination, we created a demo by pairing the Whisper model with a “wake word” to activate transcribing speech. The application is available in the repo: https://github.com/chat-ai-app/chat-ai-assistant-demo

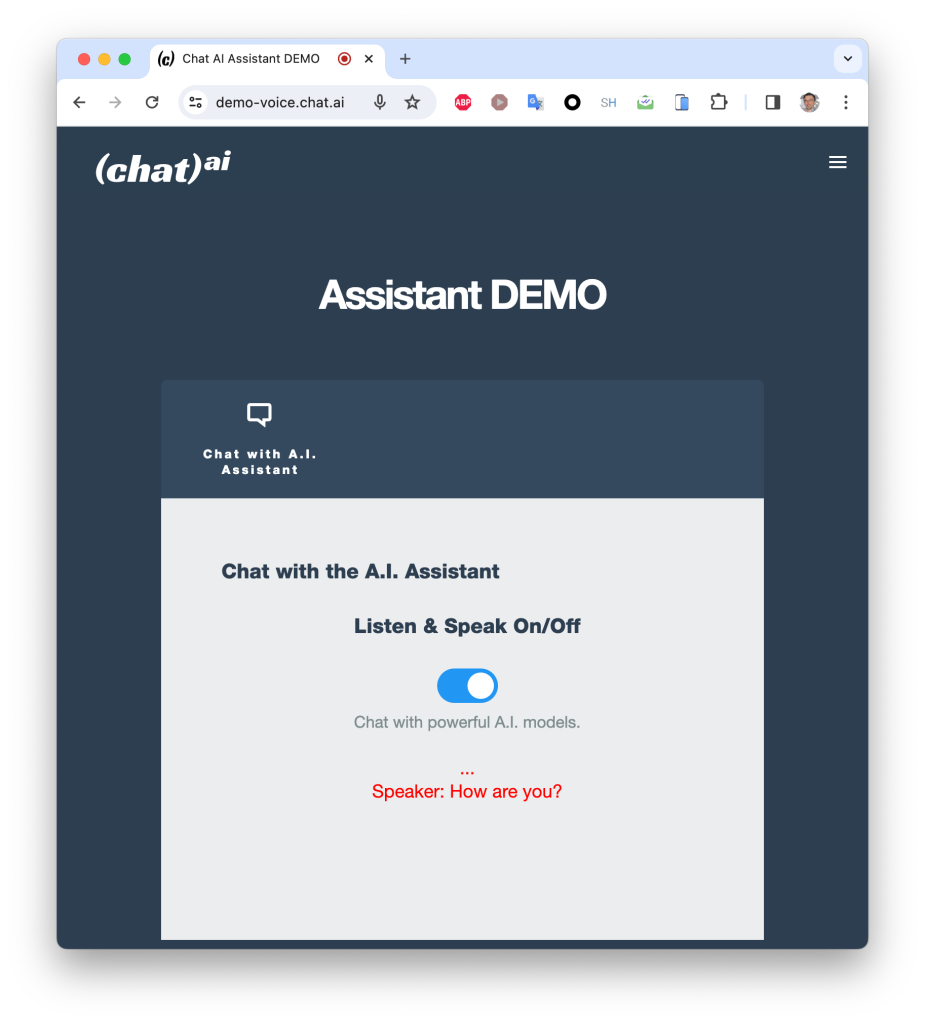

and the demo is deployed here: https://demo-voice.chat.ai/

The “wake word” is the phrase “Hey Haley”. “Haley” is the name of our AI Assistant.

The demo displays the text that was transcribed from the speaker. The text is not further processed to generate a response from the AI Assistant. We’re just testing the transcription part in this demo.
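The flow described above (toggle on, wait for the wake word, transcribe, display the text) can be sketched as a small state machine. This is only an illustration of the idea, not the demo's actual code; the state names, event names, and `step` function are hypothetical.

```typescript
type Mode = "off" | "awaitingWakeWord" | "transcribing";

type VoiceEvent =
  | { type: "toggleOn" }
  | { type: "toggleOff" }
  | { type: "wakeWordDetected" }
  | { type: "transcriptReady"; text: string };

interface VoiceState {
  mode: Mode;
  transcripts: string[]; // text shown to the user
}

const initialState: VoiceState = { mode: "off", transcripts: [] };

// Pure transition function: returns the next state for a given event.
function step(state: VoiceState, event: VoiceEvent): VoiceState {
  switch (event.type) {
    case "toggleOn":
      return state.mode === "off" ? { ...state, mode: "awaitingWakeWord" } : state;
    case "toggleOff":
      return { ...state, mode: "off" };
    case "wakeWordDetected":
      // Speech is only transcribed after the wake word has been heard.
      return state.mode === "awaitingWakeWord" ? { ...state, mode: "transcribing" } : state;
    case "transcriptReady":
      if (state.mode !== "transcribing") return state;
      // Display the text, then go back to waiting for the next wake word.
      return { mode: "awaitingWakeWord", transcripts: [...state.transcripts, event.text] };
    default:
      return state;
  }
}
```

Keeping the transitions in one pure function makes it easy to check that, for example, a transcript arriving before the wake word is ignored.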

I’ll post a separate blog entry on developing the wake word model, which uses an open-source library called openWakeWord for training: https://github.com/dscripka/openWakeWord

and an open-source JavaScript library for deployment: https://github.com/vital-ai/vital-wakeword-js

In some initial tests on laptops, transcribing a short phrase like “What’s the weather tomorrow in Brooklyn” takes about 1 second. This should improve with testing of different configuration settings and by enabling further optimizations, such as WebGPU, that make better use of the underlying hardware.

The “wake word” also needs additional training to make it more robust. It may take a few attempts to trigger the wake word; the demo should sound a “ding” when it is activated.

If you are a developer, you may wish to open up the JavaScript console to see some logging of activity.

When you open the demo application, it should request access to the microphone. Slide the toggle to the right to have the demo listen for the wake word.

The demo loads the wake word model after you slide the toggle, and then loads the Whisper model when the first transcription is attempted, so the first few interactions may be delayed while these steps complete.
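This kind of load-on-first-use behavior can be sketched with a small memoizing wrapper. The `lazy` helper and the stand-in loader below are hypothetical and only illustrate the pattern; they are not the demo's implementation.

```typescript
// Wraps an expensive async loader so it runs at most once, on first use.
function lazy<T>(load: () => Promise<T>): () => Promise<T> {
  let cached: Promise<T> | undefined;
  return () => {
    if (cached === undefined) {
      cached = load().catch((error: unknown) => {
        cached = undefined; // allow a retry after a failed load
        throw error;
      });
    }
    return cached;
  };
}

// Hypothetical stand-in for downloading and initializing a model.
let whisperLoads = 0;
const getWhisper = lazy(async () => {
  whisperLoads += 1;
  return { name: "whisper-tiny" };
});
```

The first call to `getWhisper()` pays the loading cost and later calls reuse the same promise, which matches the delay being limited to the first few interactions. An application that wants to hide that delay could call the getter early, for instance right after the toggle is switched on, although that trades bandwidth for responsiveness.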

Please share any feedback on the demo in the comments, and if you are a developer, please consider contributing to the libraries linked above!